OpenAI launches Sora social media app for AI-generated videos, raising 'AI slop' and copyright worries

The company behind ChatGPT released its new Sora social media app on Tuesday, an attempt to draw the eyeballs currently fixed on short-form videos on TikTok, YouTube, and Meta-owned Instagram and Facebook.

The new iPhone app taps into the appeal of being able to make a video of yourself, doing just about anything that can be imagined, in styles ranging from anime to highly realistic.

But a scrolling flood of such videos taking over social media has some worried about "AI slop" that crowds out more authentic human creativity and degrades the information ecosystem.

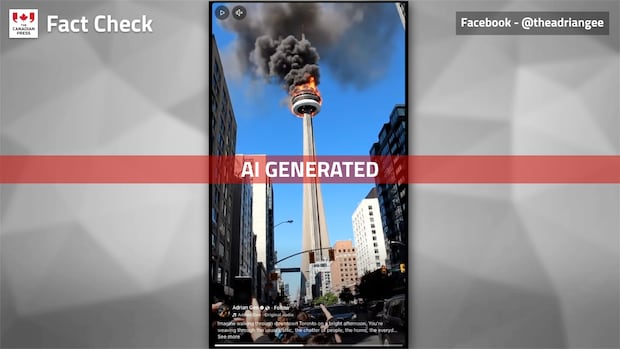

AI-generated video has grown more sophisticated as the technology has improved, to the point of duping viewers. Last week, an AI video of the CN Tower on fire went viral on Facebook, where some users reacted in shock, thinking the video was real. Everything from benign videos of bunnies hopping on trampolines to deepfakes of supposed wildfire scenes has tricked social media users.

The Sora app's official launch video features an AI-generated version of OpenAI CEO Sam Altman speaking from a psychedelic forest, the moon and a stadium crowded with cheering fans watching rubber duck races. He introduces the new tool before handing it off to colleagues placed in other outlandish scenarios. The app is available only on Apple devices for now, starting in the U.S. and Canada.

Meta launched its own feed of AI short-form videos within its Meta AI app last week. In an Instagram post announcing the new Vibes product, Meta CEO Mark Zuckerberg posted a carousel of AI videos, including a cartoon version of himself, an army of fuzzy, beady-eyed beings jumping around and a kitten kneading a ball of dough.

Both Sora and Vibes are designed to be highly personalized, recommending new videos based on what people have already engaged with.

Jose Marichal, a professor of political science at California Lutheran University who studies how AI is restructuring society, says his own social media feeds on TikTok and other sites are already full of such videos, from a "house cat riding a wild animal from the perspective of a doorbell camera" to fake natural disaster reports that are engaging but easily debunked. He said you can't blame people for being hard-wired to "want to know if something extraordinary is happening in the world."

What's dangerous, he said, is when such videos dominate what we see online.

"We need an information environment that is mostly true or that we can trust, because we need to use it to make rational decisions about how to collectively govern," he said.

If not, "we either become super, super skeptical of everything or we become super certain," Marichal said. "We're either the manipulated or the manipulators. And that leads us toward things that are something other than liberal democracy, other than representative democracy."

OpenAI made some efforts to address those concerns in its announcement on Tuesday.

"Concerns about doomscrolling, addiction, isolation and [reinforcement learning]-sloptimized feeds are top of mind," it said in a blog post. It said it would "periodically poll users on their well-being" and give them options to adjust their feed, with a built-in bias to recommend posts from friends rather than strangers.

Copyright content on Sora

Sora also allows users to spin videos from copyrighted content, a move that's likely to ruffle feathers in Hollywood.

Copyright owners, such as television and movie studios, must opt out of having their work appear in the video feed, company officials said, describing it as a continuation of its prior policy toward image generation.

The ChatGPT-maker has been in talks with a variety of copyright holders in recent weeks to discuss the policy, company officials said. At least one major studio, Disney, has already opted out of having its material appear in the app, people familiar with the matter said. Earlier this year, OpenAI pressed the Trump administration to declare that training AI models on copyrighted material fell under the "fair use" provision in copyright law.

"Applying the fair use doctrine to AI is not only a matter of American competitiveness — it's a matter of national security," OpenAI argued in March. Without this step, it said at the time, U.S. AI companies would lose their edge over rivals in China.

OpenAI officials said the company has put measures in place to block people from creating videos of public figures or other users of the app without permission. The likenesses of public figures and other users cannot be used until those people upload their own AI-generated video and give their permission.

One such step is a "liveness check," where the app prompts a user to move their head in different directions and recite a random string of numbers. Users will be able to see drafts of videos that involve their likeness.

cbc.ca